Better Decisions, Faster Delivery, Stronger Teams

Unlock software delivery efficiency with data-driven insights. Improve planning, reduce risks, and enhance team performance with key agile metrics.

Track essential software engineering KPIs to improve your team's workflow and deliver higher-quality software. Learn how to optimize with key metrics.

This listicle covers eight essential software engineering KPIs your team should track in 2025. Monitoring these metrics helps you identify bottlenecks, streamline processes, and validate whether your agile practices are working. Understanding them supports data-driven decisions, helping you optimize performance and make sure you are building the right product efficiently. From cycle time to defect density, we'll cover the key indicators that help teams improve and deliver value faster. Tools like Umano automate measuring, analyzing, and acting on these KPIs.

Cycle time is a crucial software engineering KPI that measures how long a piece of work, such as a feature or bug fix, takes to move through the software development process, typically from the start of coding to deployment in production. Tracking cycle time lets teams identify bottlenecks, optimize their workflow, and improve overall development efficiency. Knowing how long each stage takes lets teams make data-driven decisions that streamline their operations and deliver value faster. This KPI is essential for any team that wants to embrace agile methodologies and respond more quickly to market demands.

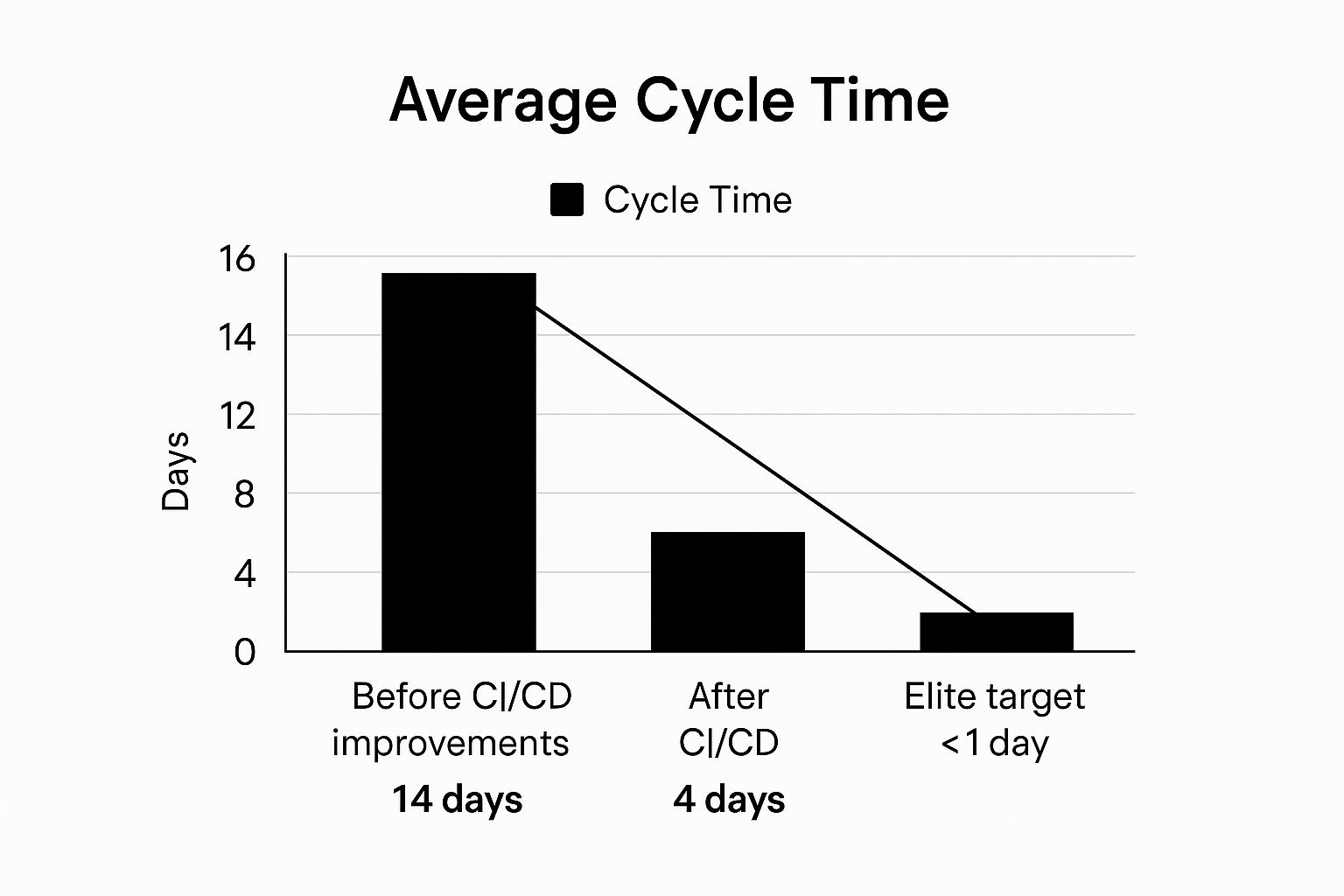

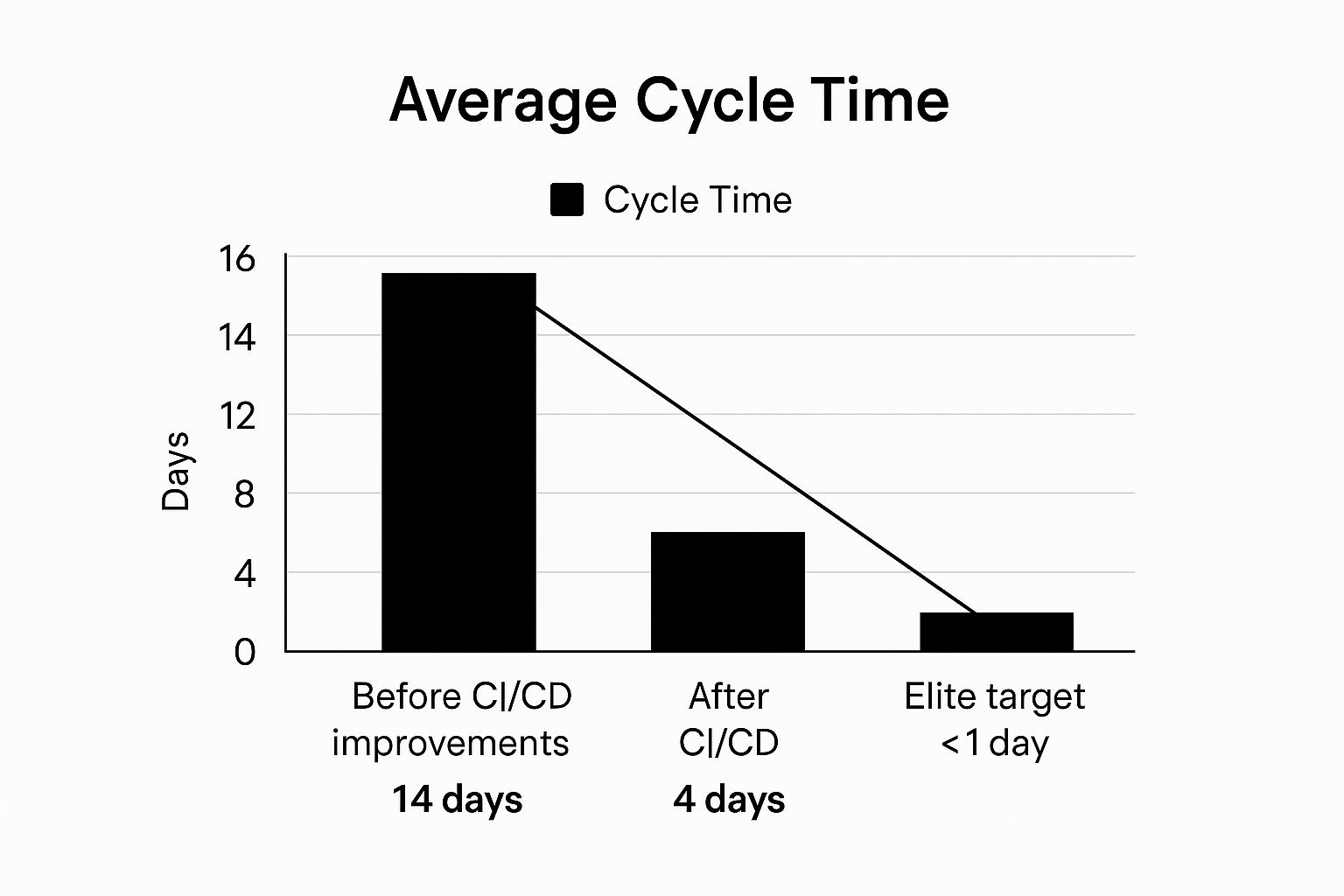

The infographic above visualizes the impact of optimizing cycle time. It shows a comparison of cycle time before and after implementing process improvements, breaking it down into key stages: coding, review, testing, and deployment. As you can see, optimizing each stage contributes to a significantly reduced overall cycle time. This chart clearly demonstrates the potential for improvement and how streamlining each step can lead to substantial gains in delivery speed.

Cycle time is often visualized with cumulative flow diagrams or control charts, which reveal trends and variation over time. It is usually measured in hours or days and can be broken down into individual stages such as coding, review, testing, and deployment to pinpoint specific areas for improvement. If the testing stage consistently takes longer than the others, for example, that signals a potential bottleneck worth investigating. This granular view lets teams target issues precisely and improve efficiency.

Spotify, for instance, used cycle time analysis to reduce its average cycle time from two weeks to just four days by adopting CI/CD practices and breaking features into smaller, more manageable chunks. Similarly, GitHub and Netflix both monitor cycle time as a core metric to optimize their development processes and maintain a rapid release cadence.
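The per-stage breakdown can be computed from the timestamps at which a work item enters each stage. The following Python sketch assumes a hypothetical `WorkItem` record holding those timestamps and a fixed list of stage names; real tools such as Jira expose status-change history in their own formats, so treat the data shape here as illustrative only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical workflow stages, in order; adapt to your own board's states.
STAGES = ["coding", "review", "testing", "deployment"]


@dataclass
class WorkItem:
    key: str
    entered: dict[str, datetime]  # when the item entered each stage
    done: datetime                # when the item finished the last stage


def stage_durations(item: WorkItem) -> dict[str, timedelta]:
    """Time spent in each stage: from entering it until entering the next (or done)."""
    durations = {}
    for i, stage in enumerate(STAGES):
        end = item.entered[STAGES[i + 1]] if i + 1 < len(STAGES) else item.done
        durations[stage] = end - item.entered[stage]
    return durations


def cycle_time(item: WorkItem) -> timedelta:
    """Total cycle time: from entering the first stage until done."""
    return item.done - item.entered[STAGES[0]]


item = WorkItem(
    key="APP-1",
    entered={
        "coding": datetime(2025, 1, 6, 9),
        "review": datetime(2025, 1, 7, 17),
        "testing": datetime(2025, 1, 8, 12),
        "deployment": datetime(2025, 1, 10, 9),
    },
    done=datetime(2025, 1, 10, 11),
)

durations = stage_durations(item)
slowest = max(durations, key=durations.get)
print(cycle_time(item))  # 4 days, 2:00:00
print(slowest)           # testing
```

With this sample item, testing accounts for 45 of the 98 hours, which is exactly the kind of bottleneck signal described above. Aggregating `cycle_time` over many items (for example, the median per sprint) is a natural next step.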

Features and Benefits of Tracking Cycle Time:

Pros and Cons of Using Cycle Time:

Pros:

Cons:

Tips for Using Cycle Time Effectively:

Popularized By:

Cycle time deserves its place among essential software engineering KPIs because it directly measures the speed and efficiency of the development process. By focusing on it, teams can optimize their workflows, deliver value faster, and increase customer satisfaction, which makes it a critical tool for engineering managers, scrum masters, and agile coaches looking to improve team performance. Jira users benefit in particular, because cycle time can be visualized and analyzed within the platform. The metric also resonates with DevOps leaders and teams in agile environments, where rapid iteration and continuous improvement are paramount. Used well, cycle time can give an organization a significant competitive advantage.

Deployment Frequency, a crucial software engineering KPI, measures how often code is successfully deployed to production. This metric provides valuable insights into a team's ability to deliver small batches of work quickly and efficiently, reflecting the maturity of their Continuous Integration and Continuous Delivery (CI/CD) practices. A higher deployment frequency generally correlates with better team performance, faster feedback loops, and more responsive product development. Tracking Deployment Frequency helps organizations understand their development velocity and identify areas for improvement in their software delivery lifecycle. This makes it an essential KPI for anyone involved in software development, from engineers to product managers.

This KPI typically counts successful deployments to production over a specific time period, such as a day, week, or month. It is a key metric within the DORA (DevOps Research and Assessment) framework, which provides a widely recognized benchmark for measuring software delivery performance. Deployment Frequency is often analyzed alongside the change failure rate to provide a comprehensive view of delivery speed and stability. This combined analysis helps teams understand if they are sacrificing quality for speed, or vice versa.
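As a rough illustration of this counting, the Python sketch below tallies successful production deployments per ISO week and computes an average over a fixed window. The `Deployment` record and its `at` and `succeeded` fields are hypothetical; in practice the data would come from your CI/CD system's deployment history.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Deployment:
    at: datetime
    succeeded: bool  # True if the change reached production successfully


def deployments_per_week(deploys: list[Deployment]) -> dict[tuple[int, int], int]:
    """Count successful deployments per ISO (year, week)."""
    counts: Counter = Counter()
    for d in deploys:
        if d.succeeded:
            year, week, _ = d.at.isocalendar()
            counts[(year, week)] += 1
    return dict(counts)


def average_per_week(deploys: list[Deployment], start: datetime, end: datetime) -> float:
    """Successful deployments per week in [start, end).

    Dividing by the window length (not by the number of weeks that had
    deployments) keeps quiet weeks from inflating the average.
    """
    weeks = (end - start).total_seconds() / (7 * 24 * 3600)
    shipped = sum(1 for d in deploys if d.succeeded and start <= d.at < end)
    return shipped / weeks


deploys = [
    Deployment(datetime(2025, 1, 6, 10), True),
    Deployment(datetime(2025, 1, 8, 15), True),
    Deployment(datetime(2025, 1, 9, 11), False),  # failed deploy: not counted
    Deployment(datetime(2025, 1, 14, 9, 30), True),
]

print(deployments_per_week(deploys))  # {(2025, 2): 2, (2025, 3): 1}
print(average_per_week(deploys, datetime(2025, 1, 6), datetime(2025, 1, 20)))  # 1.5
```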

Features and Benefits:

Pros:

Cons:

Examples of Successful Implementation:

Actionable Tips:

When and Why to Use This Approach:

Deployment Frequency is a valuable KPI for any software development team aiming to improve their agility, speed, and responsiveness. It's particularly relevant for teams working in agile environments, practicing continuous delivery, or aiming to optimize their DevOps practices. By tracking and analyzing this metric, organizations can identify bottlenecks in their delivery pipeline, improve their CI/CD processes, and ultimately deliver value to their customers faster.

Popularized By:

Mean Time to Recovery (MTTR) is a crucial software engineering KPI that measures the average time it takes to restore service after a failure or outage. It reflects how quickly your team responds to incidents and how resilient your systems are, which makes it a critical measure of your incident management process's effectiveness. For engineering managers, scrum masters, and DevOps leaders especially, understanding and optimizing MTTR is paramount for delivering reliable software and minimizing the impact of disruptions on users.

MTTR tracks the time elapsed from the moment an incident is detected to the point when the service is fully restored and operational. This duration is usually measured in minutes or hours. A lower MTTR signifies better operational practices, a more robust incident response process, and more resilient software systems. In contrast, a high MTTR suggests potential weaknesses in your incident management procedures, monitoring systems, or system architecture itself. MTTR is also one of the four key DORA metrics for DevOps performance, highlighting its importance in modern software development practices.

How MTTR Works:

The calculation of MTTR involves summing the total downtime across multiple incidents and dividing it by the number of incidents. For example, if you have three incidents with downtimes of 30 minutes, 60 minutes, and 90 minutes, the MTTR is (30 + 60 + 90) / 3 = 60 minutes.
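The same calculation can be run directly on incident records. This Python sketch assumes each hypothetical `Incident` carries a detection timestamp and a restoration timestamp, matching the definition above (detection to full restoration), and reproduces the 60-minute worked example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    detected_at: datetime
    restored_at: datetime


def mean_time_to_recovery(incidents: list[Incident]) -> timedelta:
    """Total downtime across incidents divided by the number of incidents."""
    if not incidents:
        raise ValueError("MTTR is undefined when there are no incidents")
    total = sum((i.restored_at - i.detected_at for i in incidents), timedelta())
    return total / len(incidents)


incidents = [
    Incident(datetime(2025, 3, 1, 12, 0), datetime(2025, 3, 1, 12, 30)),   # 30 minutes
    Incident(datetime(2025, 3, 8, 9, 0), datetime(2025, 3, 8, 10, 0)),     # 60 minutes
    Incident(datetime(2025, 3, 15, 22, 0), datetime(2025, 3, 15, 23, 30)), # 90 minutes
]
print(mean_time_to_recovery(incidents))  # 1:00:00
```

Because a single long outage can dominate the mean, some teams also report the median alongside it; that is a reporting choice, not part of the MTTR definition.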

Features and Benefits:

Pros and Cons:

Pros:

Cons:

Examples of Successful Implementation:

Actionable Tips for Improvement:

When and Why to Use MTTR:

MTTR should be used whenever you need to understand and improve your incident response process. It's especially relevant for Agile teams, DevOps practitioners, and SREs focused on delivering reliable and highly available software. Tracking MTTR helps identify bottlenecks, prioritize improvements, and measure the impact of changes to your systems and processes. By consistently monitoring and optimizing MTTR, you can create a more resilient and responsive system, minimizing the impact of failures on your users and your business.

Change Failure Rate is a crucial software engineering KPI that measures the percentage of code changes that cause failures, incidents, or rollbacks in production. It provides valuable insight into the effectiveness of your engineering practices and testing strategies, and into the overall reliability of your deployment process. A lower change failure rate signifies higher code quality, more robust testing, and a more stable delivery lifecycle, which makes this metric vital for any software development team striving for operational excellence.

Change Failure Rate is calculated as (number of failed changes / total number of changes) × 100%. This calculation is typically performed over weekly or monthly periods to identify trends and track progress. As a core DORA metric for evaluating deployment safety, it is often segmented by change type, team, or service to pinpoint specific areas for improvement. For example, you might track the change failure rate for different feature releases, infrastructure updates, or individual teams. This granular approach allows for more targeted interventions and faster remediation of issues.
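A minimal Python sketch of the formula, including segmentation by service. The `Change` record and its `failed` flag are hypothetical; deciding what counts as a failed change (incident, rollback, hotfix) is up to your team and should be applied consistently.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Change:
    service: str
    failed: bool  # caused a failure, incident, or rollback in production


def change_failure_rate(changes: list[Change]) -> float:
    """(number of failed changes / total number of changes) x 100."""
    if not changes:
        raise ValueError("change failure rate is undefined with no changes")
    return 100.0 * sum(c.failed for c in changes) / len(changes)


def change_failure_rate_by_service(changes: list[Change]) -> dict[str, float]:
    """Segment the rate by service to show where failures concentrate."""
    groups: dict[str, list[Change]] = defaultdict(list)
    for c in changes:
        groups[c.service].append(c)
    return {service: change_failure_rate(cs) for service, cs in groups.items()}


changes = (
    [Change("checkout", False)] * 3
    + [Change("checkout", True)]
    + [Change("search", False)] * 6
)
print(change_failure_rate(changes))             # 10.0
print(change_failure_rate_by_service(changes))  # {'checkout': 25.0, 'search': 0.0}
```

Here the overall rate of 10% hides the fact that every failure came from `checkout`, which is where a targeted look at testing would start.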

Why Use Change Failure Rate?

This KPI directly correlates with production stability and reliability. Monitoring and actively reducing your Change Failure Rate can significantly improve the user experience and cut the costs associated with production incidents. It encourages better testing and quality assurance by making the impact of insufficient testing on production stability visible. Change Failure Rate also helps you evaluate your CI/CD pipelines by revealing where automated checks let problems slip through to production and where more automation would help. Finally, it can give early warning of technical debt accumulation: a steadily rising Change Failure Rate may indicate that technical debt is hindering your ability to deliver changes reliably.

Pros:

Cons:

Examples of Successful Implementation:

Actionable Tips for Improvement:

Popularized By:

Change Failure Rate provides a quantifiable measure of your software delivery performance. By tracking and actively working to lower this metric, you can improve the stability and reliability of your software, leading to a better user experience and a more efficient development process. Its inclusion in the list of essential software engineering KPIs is undeniable due to its direct correlation with production stability and its ability to drive continuous improvement in engineering practices.

Code coverage, a crucial software engineering KPI, measures the percentage of your codebase executed during automated testing. This metric helps engineering teams, scrum masters, and agile coaches gain valuable insights into the thoroughness of their test suites and identify gaps in testing that could hide potential defects. While code coverage doesn't directly measure code quality, maintaining a high level of coverage provides confidence that code changes, particularly during rapid development cycles, won't introduce regressions or break existing functionality. This makes it a key metric for DevOps leaders and team leads in agile environments.

How Code Coverage Works:

Code coverage tools analyze your code and tests, tracking which lines, branches (conditional statements like if/else), and paths (combinations of branches) are executed during test runs. The results are typically expressed as a percentage. For instance, 80% branch coverage means 80% of the possible branches within your code were executed during testing. These results are often visually represented within IDEs and CI/CD pipelines using colored overlays (green for covered, red for uncovered) directly on the code itself, making it easy for software development teams to pinpoint untested areas.
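To make the percentages concrete, this Python sketch computes line and branch coverage from sets of executable and executed lines and branches. The `FileCoverage` structure is hypothetical and far simpler than the data real tools (coverage.py, JaCoCo, Istanbul) produce; those tools compute these numbers for you, so the point here is only the arithmetic.

```python
from dataclasses import dataclass, field

Branch = tuple[int, int]  # (line of the condition, line it can jump to)


@dataclass
class FileCoverage:
    executable_lines: set[int]
    executed_lines: set[int]
    branches: set[Branch] = field(default_factory=set)
    taken_branches: set[Branch] = field(default_factory=set)


def percent(covered: int, total: int) -> float:
    # Treat "nothing to cover" as fully covered to avoid dividing by zero.
    return 100.0 if total == 0 else 100.0 * covered / total


def line_coverage(files: list[FileCoverage]) -> float:
    total = sum(len(f.executable_lines) for f in files)
    covered = sum(len(f.executed_lines & f.executable_lines) for f in files)
    return percent(covered, total)


def branch_coverage(files: list[FileCoverage]) -> float:
    total = sum(len(f.branches) for f in files)
    covered = sum(len(f.taken_branches & f.branches) for f in files)
    return percent(covered, total)


def uncovered_lines(f: FileCoverage) -> list[int]:
    return sorted(f.executable_lines - f.executed_lines)


# One hypothetical file with two if/else conditions (lines 2 and 6);
# the branch from line 6 to line 9 never ran.
report = FileCoverage(
    executable_lines=set(range(1, 11)),
    executed_lines=set(range(1, 9)),
    branches={(2, 3), (2, 5), (6, 7), (6, 9)},
    taken_branches={(2, 3), (2, 5), (6, 7)},
)
print(line_coverage([report]))    # 80.0
print(branch_coverage([report]))  # 75.0
print(uncovered_lines(report))    # [9, 10]
```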

Features and Benefits:

Pros and Cons:

Pros:

Cons:

Examples of Successful Implementation:

Actionable Tips:

Why Code Coverage Matters for Software Engineering KPIs:

Code coverage, while not the sole indicator of code quality, provides valuable insight into testing thoroughness and helps identify potential risk areas within a codebase. By tracking and improving code coverage, CTOs, product owners, and development teams can build more robust and reliable software, reduce the likelihood of regressions, and foster a culture of quality. Used effectively, code coverage is a powerful tool in the pursuit of delivering high-quality software.

Lead Time for Changes is a crucial software engineering KPI that measures the time between committing a code change and successfully deploying it to production. It gives a holistic view of your delivery pipeline's efficiency and shows how quickly your organization can respond to changing business needs and customer demands through software updates. As a key indicator of team agility, it earns its place on this list by providing a high-level overview of delivery performance that helps organizations pinpoint bottlenecks and streamline their processes. For engineering managers, scrum masters, agile coaches, and DevOps leaders, understanding and optimizing Lead Time for Changes is essential for driving continuous improvement and achieving faster time to market.

How It Works:

Lead Time for Changes encompasses the entire software delivery lifecycle, starting from the moment a developer commits code to version control and ending when that change is successfully running in production. This includes all the intermediate steps like building, testing, integrating, and deploying the code. It is typically measured in hours, days, or weeks, with shorter lead times indicating a more efficient and streamlined process.
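A minimal Python sketch of the measurement, assuming you can pair each commit with the time the first deployment containing it reached production. The `ChangeRecord` fields are hypothetical. It reports the median rather than the mean, a design choice made here so that a handful of long-lived changes do not dominate the figure.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class ChangeRecord:
    sha: str
    committed_at: datetime
    deployed_at: Optional[datetime]  # None while the change is not yet in production


def lead_times(changes: list[ChangeRecord]) -> list[timedelta]:
    """Commit-to-production time for every change that has been deployed."""
    return [c.deployed_at - c.committed_at for c in changes if c.deployed_at is not None]


def median_lead_time(changes: list[ChangeRecord]) -> timedelta:
    times = lead_times(changes)
    if not times:
        raise ValueError("no deployed changes to measure")
    return median(times)


changes = [
    ChangeRecord("a1", datetime(2025, 1, 6, 10), datetime(2025, 1, 6, 14)),  # 4 hours
    ChangeRecord("b2", datetime(2025, 1, 6, 11), datetime(2025, 1, 7, 11)),  # 1 day
    ChangeRecord("c3", datetime(2025, 1, 7, 9), datetime(2025, 1, 10, 9)),   # 3 days
    ChangeRecord("d4", datetime(2025, 1, 9, 15), None),                      # still pending
]
print(median_lead_time(changes))  # 1 day, 0:00:00
```

Pending changes are excluded from the median; how long they have been waiting is a useful complementary signal.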

Features:

Pros:

Cons:

Examples of Successful Implementation:

Actionable Tips for Improvement:

When and Why to Use Lead Time for Changes:

Lead Time for Changes is a valuable KPI for any organization that develops and delivers software. It's particularly relevant for teams adopting agile and DevOps practices, where rapid and frequent deployments are a key objective. Tracking and optimizing this metric helps organizations:

Popularized By:

Technical Debt Ratio is a crucial software engineering KPI that compares the estimated effort needed to fix a codebase's maintainability problems with the effort it took to build it. For engineering managers, scrum masters, and development teams striving for sustainable velocity, understanding and managing this KPI is essential for balancing short-term feature delivery with the long-term health of the software. It provides a measurable way to track accumulated technical compromises (the "debt") that can slow down future development, increase bug rates, and hinder innovation. This makes it a valuable addition to any suite of software engineering KPIs.

This metric helps teams understand how much "interest" they're paying on their technical debt in the form of reduced development speed, increased bug fixing time, and difficulty implementing new features. By monitoring technical debt, teams can make informed decisions about when and how to address it, preventing it from becoming an insurmountable obstacle.

How it Works:

Technical Debt Ratio is often calculated as:

(Remediation Cost / Development Cost) × 100%

The ratio represents the percentage of development effort that would theoretically be required to repay the accumulated technical debt.
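As a worked sketch of the formula, the Python below estimates development cost as lines of code times a fixed effort per line, similar in spirit to how static analysis tools such as SonarQube derive it. The 30-minutes-per-line default is an assumption for illustration; calibrate it, and the remediation estimate, against your own tooling.

```python
def technical_debt_ratio(
    remediation_minutes: float,
    lines_of_code: int,
    minutes_per_line: float = 30.0,  # assumed effort to write one line; calibrate for your team
) -> float:
    """(Remediation Cost / Development Cost) x 100, with both costs in minutes."""
    development_minutes = lines_of_code * minutes_per_line
    if development_minutes <= 0:
        raise ValueError("development cost must be positive")
    return 100.0 * remediation_minutes / development_minutes


# Hypothetical codebase: 20,000 lines with 400 hours (24,000 minutes) of estimated remediation.
ratio = technical_debt_ratio(remediation_minutes=24_000, lines_of_code=20_000)
print(f"{ratio:.1f}%")  # 4.0%
```

In other words, repaying the estimated debt would take about 4% of the effort that went into building this codebase; the trend across releases is usually more informative than any single reading.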

Features:

Pros:

Cons:

Examples of Successful Implementation:

Actionable Tips:

Why This KPI Matters:

In the context of software engineering KPIs, Technical Debt Ratio offers a critical lens through which to view the long-term sustainability of a project. It aligns with agile principles by promoting iterative improvement and preventing the accumulation of problems that impede future progress. For engineering managers, product owners, and CTOs, this KPI provides valuable insights into the health of the codebase and allows for informed resource allocation decisions. By tracking and managing technical debt, teams can deliver high-quality software at a sustainable pace and avoid the crippling effects of unchecked technical compromises.

Defect Density is a crucial software engineering KPI that provides valuable insight into the quality of your codebase and the effectiveness of your development processes. It measures the number of confirmed defects found in a piece of software relative to its size. Tracking and analyzing defect density helps teams identify areas for improvement, predict maintenance effort, and ultimately deliver a better user experience. This makes it a vital metric for everyone responsible for delivery quality in agile environments, from developers and team leads to product owners, DevOps leaders, and CTOs.

How it Works:

Defect density is calculated using a simple formula:

Defect Density = Number of Defects / Size of Software

The "size of software" is typically measured in thousands of lines of code (KLOC) or function points. While KLOC is more common, function points offer a more abstract and potentially more accurate measure of software size, independent of the programming language used.
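A short Python sketch that applies the formula per module using KLOC and ranks modules above a threshold. The module names, counts, and the threshold of 1.0 defects per KLOC are hypothetical; a sensible threshold depends on your domain and on how consistently defects are logged.

```python
from dataclasses import dataclass


@dataclass
class Module:
    name: str
    defects: int  # confirmed defects attributed to this module
    loc: int      # lines of code


def defect_density(defects: int, loc: int) -> float:
    """Confirmed defects per thousand lines of code (KLOC)."""
    if loc <= 0:
        raise ValueError("size must be positive")
    return defects * 1000 / loc


def hotspots(modules: list[Module], threshold: float) -> list[str]:
    """Names of modules whose density exceeds the threshold, densest first."""
    scored = [(defect_density(m.defects, m.loc), m.name) for m in modules]
    return [name for density, name in sorted(scored, reverse=True) if density > threshold]


modules = [
    Module("payments", defects=18, loc=12_000),  # 1.5 per KLOC
    Module("auth", defects=4, loc=8_000),        # 0.5 per KLOC
    Module("reporting", defects=10, loc=4_000),  # 2.5 per KLOC
]
overall = defect_density(sum(m.defects for m in modules), sum(m.loc for m in modules))
print(round(overall, 2))       # 1.33
print(hotspots(modules, 1.0))  # ['reporting', 'payments']
```

The codebase as a whole sits at about 1.33 defects per KLOC, while `reporting` stands out at 2.5; per-module figures like this are what let teams focus review and testing effort.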

Features and Benefits:

Pros:

Cons:

Examples of Successful Implementation:

Actionable Tips:

When and Why to Use This Approach:

Defect density is a valuable metric throughout the software development lifecycle. Track it during development, testing, and even in production to gain a comprehensive understanding of your software quality. It is particularly relevant for projects with stringent quality requirements, large codebases, or distributed teams.

Popularized By:

The concept of defect density has been popularized by influential figures and frameworks in the software engineering field, including:

By incorporating defect density as a key software engineering KPI, organizations can gain valuable data-driven insights into their development processes, ultimately leading to higher quality software, reduced maintenance costs, and improved customer satisfaction. This metric holds significant importance for anyone involved in the software development lifecycle, from individual developers to CTOs.

| KPI | Implementation Complexity 🔄 | Resource Requirements 💡 | Expected Outcomes 📊 | Ideal Use Cases 💡 | Key Advantages ⭐ |
|---|---|---|---|---|---|
| Cycle Time | Moderate – requires consistent tracking and stage breakdowns | Medium – tracking tools and process alignment | Clear insight into workflow efficiency and bottlenecks; faster delivery | Teams optimizing development pipeline and release speed | Identifies bottlenecks; predicts timelines; improves efficiency |
| Deployment Frequency | Low to Moderate – depends on automation maturity | Medium – automated deployment pipelines needed | Increased agility and delivery speed; frequent releases | Mature CI/CD teams aiming for rapid, small batch releases | Reflects delivery capability; encourages automation; boosts responsiveness |
| Mean Time to Recovery (MTTR) | Moderate – incident tracking and response automation required | Medium to High – monitoring, alerting, automated rollbacks | Faster service restoration; improved reliability and user experience | Operations teams focused on resilience and uptime | Improves incident response; fosters system resilience; enhances user trust |
| Change Failure Rate | Moderate – needs failure tracking and clear definitions | Medium – testing automation and failure analysis | Higher deployment reliability; improved code quality | Teams emphasizing safe deployments and quality assurance | Correlates with stability; highlights QA effectiveness; early risk warning |
| Code Coverage | Moderate – requires comprehensive automated testing adoption | High – test development and maintenance | Better test suite thoroughness; reduces regressions | Development teams driving test quality and maintainability | Measures test coverage objectively; uncovers untested code areas |
| Lead Time for Changes | High – spans multiple teams and pipelines | High – requires pipeline automation and cross-team coordination | Holistic delivery efficiency; faster response to business needs | Organizations focusing on overall delivery speed and agility | Holistic process visibility; identifies multi-stage bottlenecks |
| Technical Debt Ratio | Moderate to High – needs static analysis and regular tracking | Medium – tooling and dedicated refactoring time | Improved long-term maintainability; controlled codebase quality | Teams balancing short-term delivery with sustainability | Makes hidden costs visible; supports data-driven refactoring decisions |
| Defect Density | Moderate – defect tracking and size measurement required | Medium – defect logging and analysis tools | Objective software quality measurement; predicts maintenance effort | Quality assurance and release management teams | Enables quality benchmarking; identifies problematic areas |

Effectively leveraging software engineering KPIs is crucial for any team striving for continuous improvement and high-performance software delivery. From cycle time and deployment frequency to code coverage and defect density, the key takeaways from this article highlight the importance of selecting and tracking the right metrics to understand your team's strengths and weaknesses. Mastering the use of software engineering KPIs empowers teams to make data-driven decisions, optimize development processes, and ultimately deliver higher-quality software faster and more efficiently. By focusing on areas like reducing MTTR, minimizing change failure rate, and managing technical debt, organizations can achieve significant improvements in their software development lifecycle, resulting in increased customer satisfaction and a stronger competitive edge. Remember that the goal isn’t just to collect data, but to use it to drive actionable improvements, fostering a culture of continuous learning and optimization.

Want to streamline your software engineering KPI tracking and analysis? Umano integrates with your existing tools to automatically collect and visualize these critical metrics, providing you with actionable insights to drive engineering excellence. Visit Umano to learn more and unlock the full potential of your engineering data.
